Jun 26 #34

We started with a deep dive into what we mean when we talk about “thoughts”. Not surprisingly, it turned out that we had quite different views, though with some common ground. There seemed to be a core of language-oriented, stream-of-consciousness thoughts that we all agreed on, but the further we strayed into the territory of memories, perceptions, sensations, and non-symbolic and subconscious thoughts, the less consensus there was on whether these counted. Freud’s massive influence was acknowledged but not at all venerated, quite the opposite.

I recounted a story I heard Nick Chater tell on a podcast (Jim Rutt’s, not Sam Harris’s).

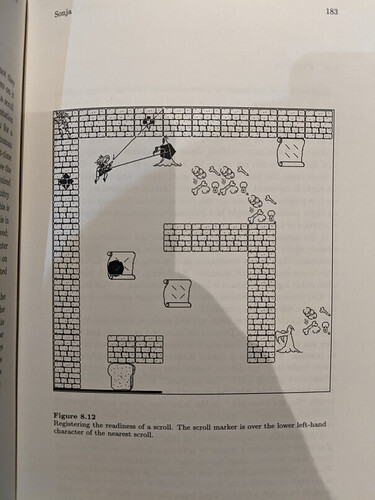

Chapman noted in footnote 3:

> This is also the easiest aspect of human active vision to study scientifically, because eye tracking apparatus can determine where you are looking, with high precision, as you move your eyes around.

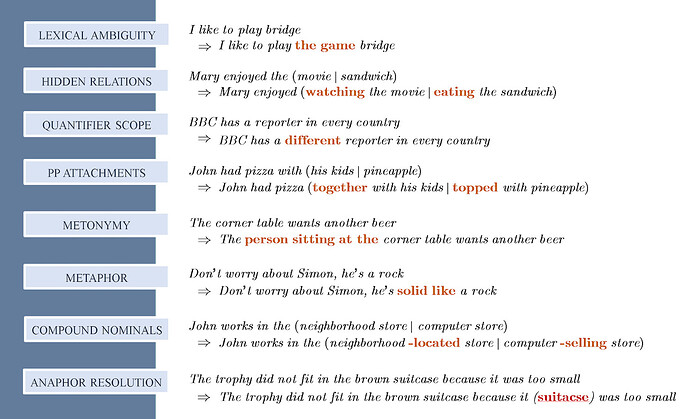

Chater described an experiment that used eye tracking to show the subject a screen of text in which only the part the subject was looking at contained real text; the rest of the screen was filled with constantly changing text. To the subject it looked like a normal page, but to anyone else it was a constantly shifting page of gibberish.

@Evan_McMullen expressed strong skepticism about the claim that the mind is flat. As I recall, Chater would say that the depth of the mind is an illusion, much like the apparent detail of our visual field: it seems detailed everywhere only because it is detailed wherever we look. Likewise, the mind appears deep only because it has depth whenever we look for depth, if that makes sense.

@Sahil was surprised I didn’t object to Chapman’s objection to objective perception:

> “Objective” would mean that it is independent of your theories, of your projects, and of anything that cannot be sensed at this moment, such as recent events. We saw that, for several in-principle reasons, this seems impossible.

Although I would have defended objective reality a few years ago, I’ve changed my mind(!) since researching QM and reading Hoffman, and I almost certainly would not defend objective perception, which sounds incoherent to me (just as objective value is incoherent, but that’s another tangent). I’m not sure where our wires got crossed.

Mandatory Joscha Bach reference…

A discussion of why some philosophers build careers around incoherent thought experiments like p-zombies (*cough* Chalmers *cough*) led to Graeber’s concept of BS jobs:

We agreed that the difference between Dennett and Chalmers, between good-faith and bad-faith philosophy, would be very difficult for an outsider to discern.

I mentioned that I had met both Dennett and Chalmers, who were a gentleman and an asshole, respectively. To be fair, I met Chalmers a very long time ago at the Santa Fe Institute, when he was still a grad student, so he may have changed a lot since then.

As always, much appreciation to the crew for another stimulating discussion!